Polygon

March - June 2021

During my time in Taiwan, I had trouble deciphering the traditional Chinese written on signs everywhere, which looked like QR codes to me. It reminded me of learning Chinese with friends I met in New Mexico, when we played first-person walking tour videos set in foreign cities like Shanghai and translated the text we saw on signs as we went.

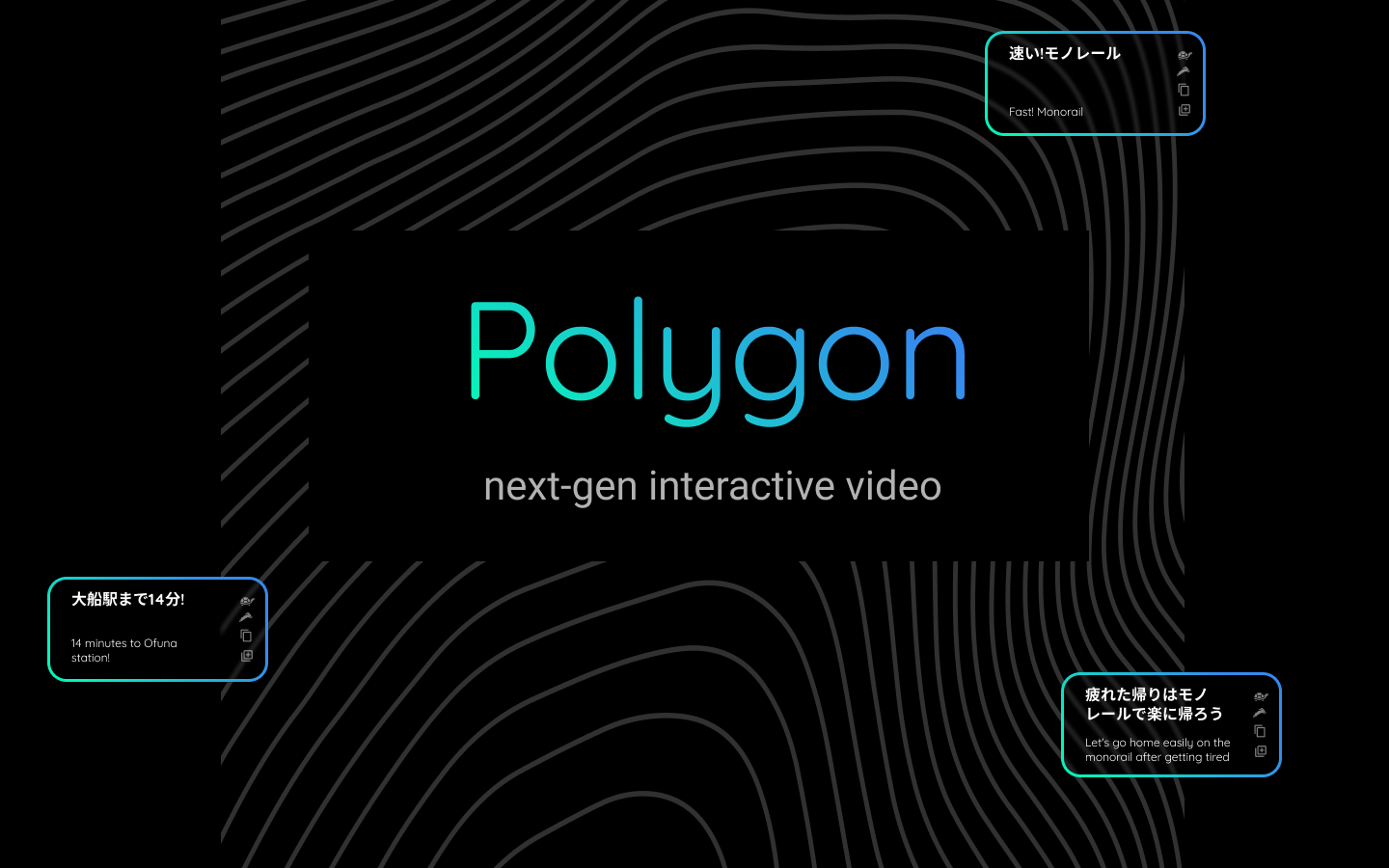

So the idea for Polygon was born: what if we could click on text and caption elements within the video player itself, and listen to, translate, and learn them natively? Within the Polygon player, the user just needs to pause the video when they want to interact with a sign. Then they click on the bubbles that pop up over detected text and interact with the translation boxes that show up within the video.
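To make the pause-and-click interaction concrete, here is a minimal TypeScript sketch of how a player could decide which bubbles to show at the paused moment and which one a click lands on. It is not Polygon's actual code: the `TextDetection` shape, the normalized bounding boxes, and the function names `detectionsAt` and `detectionAtClick` are all assumptions made for illustration.

```typescript
// Hypothetical shape for one detected piece of text (not Polygon's real schema).
interface TextDetection {
  text: string;
  startSec: number; // first moment the text is visible in the video
  endSec: number;   // last moment the text is visible in the video
  // Bounding box in normalized [0, 1] coordinates relative to the video frame,
  // so it stays valid when the player is resized.
  box: { x: number; y: number; width: number; height: number };
}

// Return the detections that are on screen at the paused timestamp.
function detectionsAt(all: TextDetection[], timeSec: number): TextDetection[] {
  return all.filter((d) => timeSec >= d.startSec && timeSec <= d.endSec);
}

// Convert a click in player pixels to normalized coordinates and
// return the first visible detection whose box contains it, if any.
function detectionAtClick(
  visible: TextDetection[],
  clickX: number,
  clickY: number,
  playerWidth: number,
  playerHeight: number
): TextDetection | undefined {
  const nx = clickX / playerWidth;
  const ny = clickY / playerHeight;
  return visible.find(
    (d) =>
      nx >= d.box.x &&
      nx <= d.box.x + d.box.width &&
      ny >= d.box.y &&
      ny <= d.box.y + d.box.height
  );
}

// Made-up sample data: two signs that overlap in time.
const sampleDetections: TextDetection[] = [
  { text: "出口", startSec: 12, endSec: 15, box: { x: 0.1, y: 0.2, width: 0.2, height: 0.1 } },
  { text: "捷運站", startSec: 14, endSec: 20, box: { x: 0.6, y: 0.5, width: 0.3, height: 0.1 } },
];

// The user pauses at 14.5 s and clicks at pixel (192, 180) in a 1280x720 player.
const visibleNow = detectionsAt(sampleDetections, 14.5);
const clicked = detectionAtClick(visibleNow, 192, 180, 1280, 720);
```

Storing boxes in normalized coordinates is one possible design choice here: the same detection data works at any player size, and only the click needs converting. A real player would also need to handle letterboxing and overlapping boxes, which this sketch ignores.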

The project has gotten over 1,000 users and served over 1.5 TB of content, which is pretty cool. Some YouTubers and language learning sites expressed interest as well, so hopefully Polygon or something similar can make language learning a much more natural and fun experience in the future.

This was the biggest personal project I've taken on to date. Outside of the Next.js app, I built out a video processing pipeline on Google Cloud to handle video upload, conversion, text detection, and speech detection automatically. This foray has shown me just how powerful, expensive, and frustrating cloud pipelines can be.

Some future ideas include detecting objects within the videos and adding a group exploration dynamic between strangers on the internet. Digital video constitutes over 30% of all internet traffic, and nothing has changed about how we interact with videos since they were first invented.